Does Google Autocomplete Really Reveal Sexism?

U.N. Women recently started an ad campaign using Google’s autocomplete feature to demonstrate the pervasiveness of sexism worldwide. The campaign was covered in AdWeek, which is where I think most people first heard about it. It is undoubtedly true that sexism is a powerful force in pretty much every country on Earth, and I think it is a moral imperative that we try to overturn that state of affairs. But that doesn’t necessarily mean that this particular campaign shows what people say it shows. I think there’s good reason to be skeptical of what it alleges.

Let’s start with a simple question: What kind of person begins a web search with a phrase like “women should” or “women need to”? Does that sound like the beginning of a search that a non-sexist person would enter? Well, maybe a non-sexist person would search “women should be paid as much as men.” But it’s more likely that that person would search “pay equity”, isn’t it? A phrase like “women need to” is prescriptive, and I would generally expect that you’re pretty sexist if you’re the kind of person who enters a search that essentially sounds like an order to an entire gender. This ad campaign is using loaded phrases that are predisposed toward a certain result. Of course the autocompletes are sexist: the phrases they’re choosing are practically sexist to begin with!

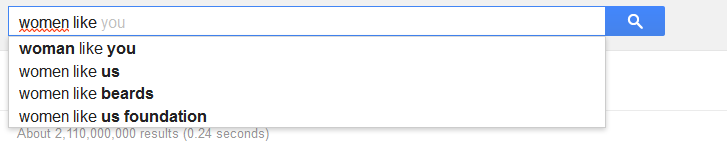

What happens when you try the same thing with less loaded search terms? Here’s the autocomplete I got for “women like”:

The first one is Google misunderstanding what I meant and suggesting a song. The second and fourth results are for an “organization to encourage, empower and engage women and girls to make a difference locally and globally”, according to the Women Like Us Foundation web site. “Women like beards” is kind of funny and not especially sexist.

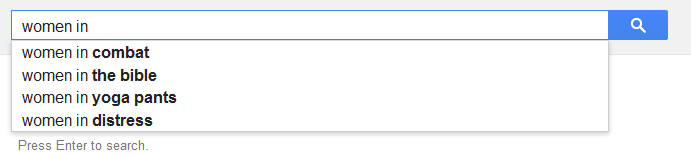

Here’s another more neutral search string I thought up:

“Women in yoga pants” seems to lead to a bunch of pages full of creepshots, so that’s definitely troubling. But “women in combat” and “women in the bible” aren’t especially sexist (depending on how they’re handled), and “women in distress” leads to web sites for an organisation that helps women who have been victims of domestic violence. In other words, autocomplete suggests more reasonable completions for more neutral search strings.

I think you could take this a step further than I did above: if a search term starts by specifying a gender, wouldn’t you expect to be somewhat likely to get essentialising results? Unless you’re searching for a fairly specific topic where gender really is the determining factor, any search that seeks to yield results about half the population is probably going to return some pretty lousy results. [As an example of a specific topic where gender is a determining factor, “women in science” results in autocompletes that are entirely sensible.]

So does Google autocomplete really demonstrate a global problem with sexism? I’m not so sure it does, at least not on the basis of what’s presented in the U.N. Women campaign. Sexism certainly is a problem globally, but looking at search terms that are predisposed toward returning sexist results is probably not the best way to highlight the problem.